The Hidden Risks of Unreliable Data in IIoT

October 2, 2018

by Shashi Sastry, Chief Product Officer

One of the key goals of Industry 4.0 is business optimization, whether it’s from predictive maintenance, asset optimization, or other capabilities that drive operational efficiencies. Each of these capabilities is driven by data, and their success is dependent on having the right data at the right time fed into the appropriate models and predictive algorithms.

Too often, data analysts find that they are working with data that is incomplete or unreliable, and they have to use additional techniques to fill in the missing information with predictions. While techniques such as machine learning or data simulation are being promoted as an elixir for bad data, they do not fix the original problem: the bad data source. These solutions are also often too complex, and they cannot be applied to certain use cases. For example, there are no “data fill” techniques that can be applied to camera video streams or patient medical data.

Any data quality management effort should start with collecting data in a trusted environment. This in turn implies that the data sources (machines, IoT devices, etc.) and the data collection processes are all trusted.

Trusting Data from IIoT Devices

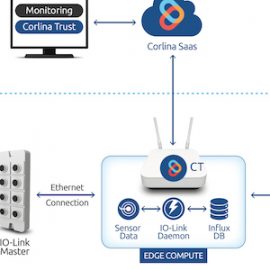

When a new device is added to a connected factory system, it’s onboarded by authenticating it, typically by using its IP address and a password. Then it’s authorized to communicate data over the network. The flow of data across the network is typically protected by a secure protocol such as TLS (the successor to SSL), so a secure channel is established for ongoing data transfer. The foundation is set for trustworthy data.

But as time moves forward, the dynamic nature of an industrial environment means that this initial foundation must be renewed. For example, unless there are proactive checks to validate the ongoing authenticity of the device, or regular password updates, the device may have been compromised without anyone noticing. In that case, the original authentication of the device as a trustworthy data source can no longer be relied upon. A method for continuously updating this verification is required.
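One way to picture continuous verification is as a freshness check on each device's trust record. The sketch below is illustrative only: the `DeviceTrust` record, the `needs_reverification` helper, and the 24-hour and 90-day thresholds are all assumptions made for this example, not part of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DeviceTrust:
    """Hypothetical trust record kept for each onboarded device."""
    device_id: str
    last_verified: datetime       # last successful authenticity check
    credential_rotated: datetime  # last password or key change


def needs_reverification(
    trust: DeviceTrust,
    now: datetime,
    max_verify_age: timedelta = timedelta(hours=24),     # assumed policy value
    max_credential_age: timedelta = timedelta(days=90),  # assumed policy value
) -> bool:
    """Return True when the device's trust status has gone stale."""
    verify_stale = now - trust.last_verified > max_verify_age
    credential_stale = now - trust.credential_rotated > max_credential_age
    return verify_stale or credential_stale


now = datetime(2018, 10, 2, 12, 0)
fresh = DeviceTrust("press-01", now - timedelta(hours=2), now - timedelta(days=10))
stale = DeviceTrust("press-02", now - timedelta(days=3), now - timedelta(days=10))
print(needs_reverification(fresh, now))  # False
print(needs_reverification(stale, now))  # True
```

A scheduler could run a check like this periodically and trigger a fresh authenticity check, or a credential rotation, for any device that comes back stale.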

Data generation and communication from these devices is also key. Many sensors only communicate “events” or changes from the norm. In the case of a temperature sensor, if temperatures in a production environment are being maintained in a tight, valid range, the sensor may not be transmitting data for a significant period of time, say days or even weeks. But this quiet period can be difficult to interpret and impossible to rely on. What if the sensor has lost connection or malfunctioned? You need a way to validate the proper operation of the sensor itself to know for certain that you aren’t missing valid data just because none has been received.
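A common way to tell “nothing to report” apart from “not working” is a lightweight heartbeat that the sensor sends on a fixed schedule, independent of events. The following is a minimal sketch under that assumption; the `sensor_state` function, the five-minute interval, and the allowance of three missed heartbeats are hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional


def sensor_state(
    last_heartbeat: Optional[datetime],
    now: datetime,
    heartbeat_interval: timedelta = timedelta(minutes=5),  # assumed schedule
    missed_allowed: int = 3,                               # assumed tolerance
) -> str:
    """Classify a quiet sensor as 'alive' or 'unverified'.

    'alive' means recent heartbeats confirm the silence is genuine.
    'unverified' means the silence cannot be trusted as "no events".
    """
    if last_heartbeat is None:
        return "unverified"
    if now - last_heartbeat > heartbeat_interval * missed_allowed:
        return "unverified"
    return "alive"


now = datetime(2018, 10, 2, 12, 0)
print(sensor_state(now - timedelta(minutes=4), now))  # alive
print(sensor_state(now - timedelta(hours=2), now))    # unverified
```

With a check like this in place, a quiet period backed by heartbeats can be read as “temperature stayed in range,” while a quiet period without them is flagged for investigation.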

And while sensor downtime may be unavoidable, rapid insight into the state of the sensor also provides benefits. You’ll have the ability to act more quickly to rectify any operational issue with the sensor. And you’ll have a measure of the actual time period for which data were not received due to sensor malfunction.
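If heartbeat timestamps are stored, the periods for which data may be missing can be recovered after the fact. The sketch below assumes such a heartbeat log exists; the `outage_windows` function and its `tolerance` factor are hypothetical names introduced for this example.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def outage_windows(
    heartbeats: List[datetime],
    expected_interval: timedelta,
    tolerance: float = 1.5,  # assumed slack before a gap counts as an outage
) -> List[Tuple[datetime, datetime]]:
    """Return (last_seen, next_seen) pairs that bracket each suspected outage.

    Expects heartbeats sorted in ascending order. Each pair is an upper
    bound on the outage: the sensor failed at some point after last_seen.
    """
    windows = []
    for prev, cur in zip(heartbeats, heartbeats[1:]):
        if cur - prev > expected_interval * tolerance:
            windows.append((prev, cur))
    return windows


start = datetime(2018, 10, 2, 8, 0)
beats = [start + timedelta(minutes=m) for m in (0, 5, 10, 45, 50)]
for last_seen, next_seen in outage_windows(beats, timedelta(minutes=5)):
    print(last_seen, next_seen, next_seen - last_seen)
```

In this example, the only gap longer than the tolerated 7.5 minutes is the 35 minutes between the third and fourth heartbeats, so analytics covering that window can be marked as potentially incomplete.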

Finally, a trusted device is one that is working in its “right” operational state. That implies more than that the device is working and properly authenticated. For more complex devices, like a camera, it includes a measure of its complete set-up. For example, for a visual data stream that is being collected of a particular process or in a specific area to measure quality, a trust measure indicates that the camera is delivering the information it’s intended to track. If the camera has been bumped, whether intentionally or not, you’ll know that the collected data are invalid and should not be included in your analytics, and that you must again take action to bring your device back into its trusted state.

Trusting the Data Flows Across the IIoT Infrastructure

Data reliability can also be impacted by other elements of the IIoT infrastructure. Gateways or switches, for example, can introduce the same problems as a sensor. Has the gateway that was originally authorized been compromised in some way? Has a switch gone down so that data cannot be transported from a sensor to the analytics engine? Every transmission point creates the potential for disruption to predictable data flows.

And then there is the network itself.

Network Connectivity

Many sensors connect to their network over Wi-Fi, so keeping abreast of connectivity status can be difficult. Without the appropriate tools, monitoring whether all devices are connected, and quickly rectifying issues when they do occur, is impractical.

But the issues presented by lack of connectivity or changing connectivity go further. When a disconnected device re-establishes connectivity or changes its connection point, it needs to be re-authenticated to ensure the integrity of the data, maintaining system-level trust.
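The re-authentication rule described here can be sketched as a small decision: trust carries over only while a device stays on the same connection, and anything else starts untrusted. The `Connection` record and `on_connect` handler below are hypothetical names for this example, and the actual re-authentication step is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Connection:
    """Hypothetical record of a device's current network attachment."""
    device_id: str
    access_point: str
    authenticated: bool


def on_connect(
    previous: Optional[Connection],  # None if the device had dropped off
    device_id: str,
    access_point: str,
) -> Connection:
    """Keep trust only for an unbroken session on the same access point."""
    same_session = (
        previous is not None
        and previous.device_id == device_id
        and previous.access_point == access_point
        and previous.authenticated
    )
    # Anything else (reconnect after a drop, or a new connection point)
    # starts untrusted until the device is authenticated again.
    return Connection(device_id, access_point, authenticated=same_session)


live = Connection("sensor-7", "ap-east", authenticated=True)
print(on_connect(live, "sensor-7", "ap-east").authenticated)  # True
print(on_connect(live, "sensor-7", "ap-west").authenticated)  # False
print(on_connect(None, "sensor-7", "ap-east").authenticated)  # False
```

Data arriving on a connection with `authenticated=False` would be held back or tagged as untrusted until the device passes authentication again.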

Network Bandwidth

It’s obvious to any network or system designer that the available bandwidth must be sufficient to transport the information the system is implemented to collect. But it’s equally apparent that unlimited bandwidth is far from free, so industrial networks must be deployed with a practical level of capacity.

When data flows are unpredictable, particularly in operational settings where data are only transmitted on event-based triggers, it can be difficult for both system designers and operational managers to ensure that their bandwidth is optimized. If capacity is not sized for them, bursts in the data stream can easily overwhelm the available bandwidth, again leading to data loss and inaccurate analytic results.
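A back-of-the-envelope calculation shows how quickly an event-driven burst can exceed a link. The sketch below uses a deliberately simple model in which a burst arrives evenly over a short window, the link drains at a constant rate, and a fixed buffer absorbs the rest. The `burst_overflow_bytes` function and all the figures in the example are assumptions chosen for illustration.

```python
def burst_overflow_bytes(
    link_bps: float,       # link capacity in bits per second
    burst_bytes: float,    # total bytes generated by the burst
    burst_seconds: float,  # window over which the burst arrives
    buffer_bytes: float,   # buffering available along the path
) -> float:
    """Estimate bytes lost when a burst exceeds link and buffer capacity."""
    drained = link_bps / 8 * burst_seconds  # bytes the link carries in the window
    excess = burst_bytes - drained - buffer_bytes
    return max(0.0, excess)


# Hypothetical scenario: 50 devices each send a 2 MB clip within 10 seconds
# over a 10 Mbit/s uplink that has 16 MB of buffering.
lost = burst_overflow_bytes(
    link_bps=10_000_000,
    burst_bytes=50 * 2_000_000,
    burst_seconds=10,
    buffer_bytes=16_000_000,
)
print(lost)  # 71500000.0
```

In this made-up scenario, the link carries 12.5 MB during the burst and the buffer holds another 16 MB, so roughly 71.5 MB of the 100 MB burst would be lost unless the devices retry, the burst is spread out, or capacity is increased.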

Trusted Destinations

Finally, there is the last mile of the journey for operational data to reach its authorized data store and analytics environment. While data from sensors or actuators may seem less critical than customer or financial data, there continues to be the need to protect and monitor the uses of the valuable data you’ve collected. In particular, video data that includes images of employees may be restricted in its usage, and the restrictions frequently vary by state and country. The ability to set and maintain policies on data end points is the critical final step in establishing reliability of your data throughout its lifecycle.
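Endpoint policies of this kind can be thought of as a lookup from data type and jurisdiction to the purposes allowed there, with everything else denied. The sketch below is purely illustrative: the `POLICIES` table, the region labels, the purpose names, and the `is_use_allowed` helper are all invented for this example and do not reflect any actual regulation.

```python
from typing import Dict, Set, Tuple

# Hypothetical policy table: (data type, region) -> purposes allowed there.
POLICIES: Dict[Tuple[str, str], Set[str]] = {
    ("video", "region-a"): {"quality_inspection"},
    ("video", "region-b"): {"quality_inspection", "safety_review"},
    ("temperature", "region-a"): {"quality_inspection", "predictive_maintenance"},
}


def is_use_allowed(
    data_type: str,
    region: str,
    purpose: str,
    policies: Dict[Tuple[str, str], Set[str]] = POLICIES,
) -> bool:
    """Default-deny check: a use is allowed only if a policy lists it."""
    return purpose in policies.get((data_type, region), set())


print(is_use_allowed("video", "region-a", "quality_inspection"))  # True
print(is_use_allowed("video", "region-a", "safety_review"))       # False
print(is_use_allowed("video", "region-c", "quality_inspection"))  # False
```

The default-deny choice means that data from a region with no policy on file cannot be used until someone has explicitly decided what is permitted.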


Bad Data Limits Upside Potential

Calculating the trustworthiness of data requires measuring every point involved: the systems or devices generating the data, the network transporting it, the communication links, the data collection process, and the people associated with any of these points. Only then can we achieve the business goals we set for our initiatives.

Data reliability in industrial systems is key to maximizing the returns of IIoT projects.

This post was originally published on IIoT World.
